Our Giving

We choose causes based on where we believe more funding will have the biggest impact on others.

Approach

We support ideas and institutions that make the world safer, healthier, and more prosperous for all. We’ve researched hundreds of causes and chosen our focus areas based on where we think more funding will help others the most.

This has led us to a diverse portfolio of causes, from proven programs that save lives in low-income countries and reduce animal suffering, to ambitious bets on potentially transformative innovations. We’re committed to using the best evidence we can find and approaching our work with rigor, curiosity, and compassion.

Focus Areas

Global Health & Development

Supporting interventions that save and improve lives in low- and middle-income countries, from proven treatments to policy reforms.

What this looks like

- GiveWell-recommended charities: GiveWell estimates our funding has saved over 100,000 lives through interventions like medicine to prevent malaria, childhood vaccination programs, and vitamin A supplementation.

- Lead Exposure Action Fund: This collaborative fund — with partners like the Gates Foundation — works to eliminate lead poisoning, which causes irreparable harm to 1 in 2 children in low- and middle-income countries (LMICs). Grantees work to reduce lead exposure from sources like paint, cosmetics, and cookware.

- Air Quality: We support organizations working to reduce particulate air pollution in South Asia through policy advocacy, cleaner technologies, and better monitoring programs in some of the world’s most polluted cities.

- Global Growth: We’re exploring promising approaches to accelerate economic growth in LMICs, building on decades of research in development economics.

-

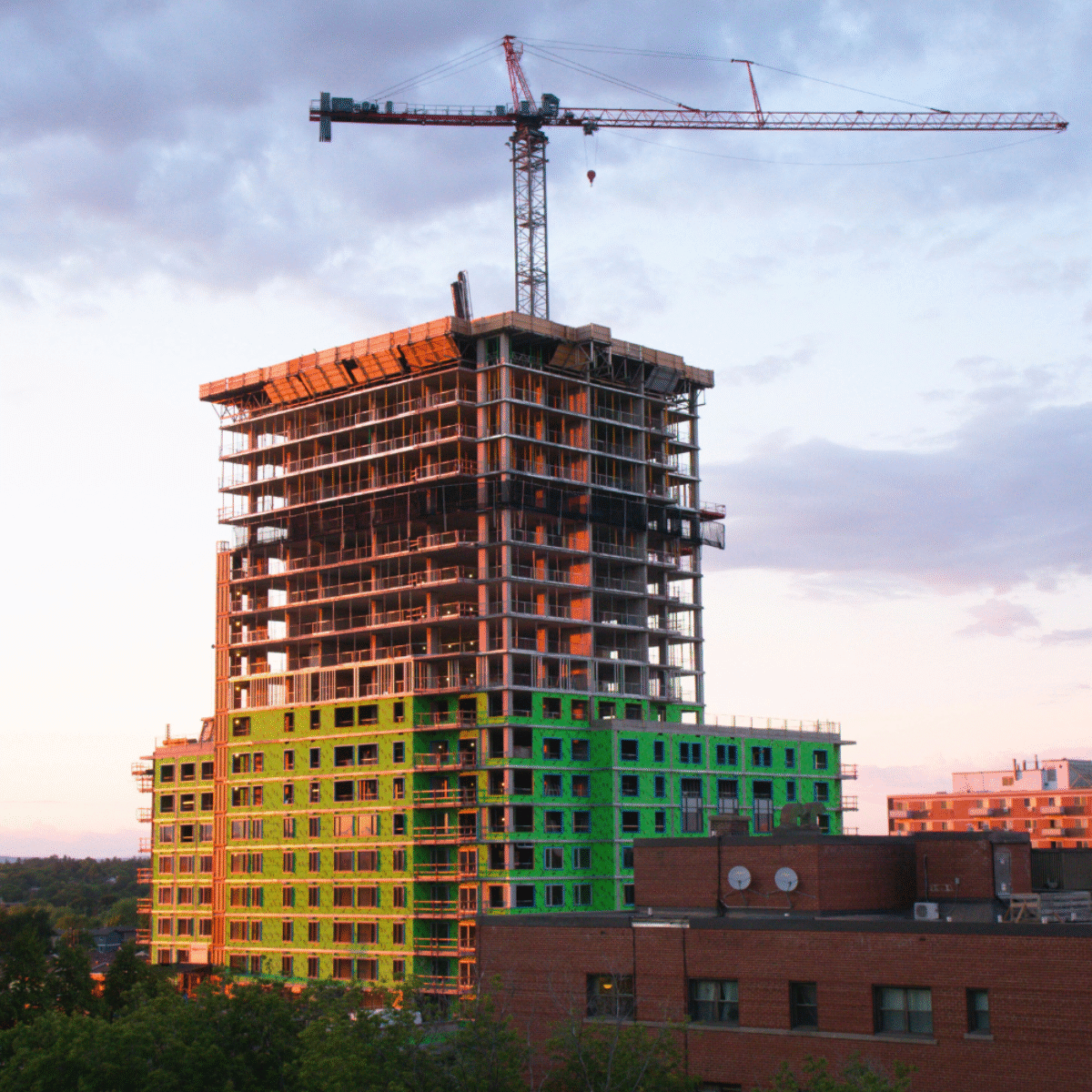

![Building under construction]()

Abundance & Growth

Accelerating economic growth and removing barriers to prosperity, primarily in the United States.

What this looks like

- Institute for Progress: IFP publishes research and advocates for policy reforms to increase the pace of scientific, technological, and industrial progress in the United States.

- California YIMBY: This advocacy organization helped advance SB 79, a landmark housing reform that was signed into law in 2025. The law created an estimated 16 million new units of zoned capacity — if just 5% are built, that would add 800,000 homes to a state facing a severe housing shortage.

- Institute for Replication: I4R runs replication workshops and works with journals to identify errors and publication bias in economics and other fields, encouraging transparency and improving trust in social science research.

Biosecurity & Pandemic Preparedness

Preventing and preparing for biological threats, from natural pandemics to engineered pathogens.

What this looks like

- SecureBio: SecureBio’s Nucleic Acid Observatory uses computational approaches to detect biological threats in wastewater, aiming to identify new pathogens before they become pandemics.

- Blueprint Biosecurity: Blueprint guides the development of technologies and policies to prevent the spread of airborne disease. They've produced state-of-the-art reports on improving PPE to protect essential workers and using ultraviolet light to disinfect public spaces.

- Mirror Biology Dialogues Fund: MBDF works to facilitate constructive conversations about risks from mirror bacteria, which scientists have warned could be extremely dangerous if created. It funds conferences, workshops, and other convenings that bring together academic researchers, industry experts, and policymakers.

Scientific Research & Global Health R&D

Advancing breakthrough research and developing new drugs and vaccines to improve the health of people around the world.

What this looks like

- David Baker: Our longtime grantee won the 2024 Nobel Prize in Chemistry for using AI to design new proteins. Baker and his colleague Neil King used these systems to develop an improved flu vaccine. They’ve also begun to develop vaccines for malaria, syphilis, and hepatitis C.

- Coalition for Global Hepatitis Elimination: Over 300 million people around the world are infected with hepatitis B or C. CGHE expands access to testing and treatment in underdiagnosed regions, working toward eliminating viral hepatitis as a public health threat.

- Hospital for Sick Children: We funded a team of academics, led by Simon Draper and Angela Minassian, to develop a next-generation malaria vaccine designed to offer longer-lasting protection with fewer doses. The vaccine could reach large-scale human trials by 2030, years ahead of other efforts.

Navigating Transformative AI

Helping humanity prepare for a safe transition to advanced AI, with a focus on preventing worst-case outcomes through research and policy work.

What this looks like

- FAR AI: This research organization conducts technical AI safety research, focusing on red-teaming and on techniques that draw on AI models' internal workings to assess how well we can predict or steer their behavior.

- BlueDot Impact: BlueDot offers free AI safety courses to professionals across many fields, from technical staff at AI labs to government policymakers. So far, it has trained over 4,500 people and helped hundreds transition into AI safety careers.

Farm Animal Welfare

Improving lives for billions of animals raised on factory farms through corporate reforms, technological innovation, and humane alternatives.

What this looks like

- Open Wing Alliance: Since 2016, this international coalition has secured over 2,500 corporate commitments to use only cage-free eggs, sparing hundreds of millions of hens from confinement in tiny battery cages.

- Egg-Tech Prize: We co-sponsored this $6 million program to accelerate technology that determines a chick's sex before hatching, preventing the mass slaughter of male chicks. The technology has become widespread in Europe and recently launched in the U.S., preventing the inhumane killing of over 200 million chicks so far.

Additional Areas

Strengthening the broader ecosystem of people and organizations tackling global challenges.

- Forecasting: We fund efforts to enable high-quality forecasts on questions relevant to high-stakes decisions. Grantees include the Forecasting Research Institute, co-founded by forecasting expert Phil Tetlock, and Metaculus, a platform that aggregates predictions from thousands of forecasters on critical future events.

- Effective Giving & Careers: We support organizations that help people make more informed choices about their careers and charitable giving. This includes groups like Giving What We Can, which has over 10,000 members who’ve pledged to give 10% of their income to effective charities, and Ambitious Impact, an incubator that has launched dozens of new nonprofits addressing neglected global issues.